Deep Learning: Deep Generative Neural Networks

Here is my Deep Learning Full Tutorial!

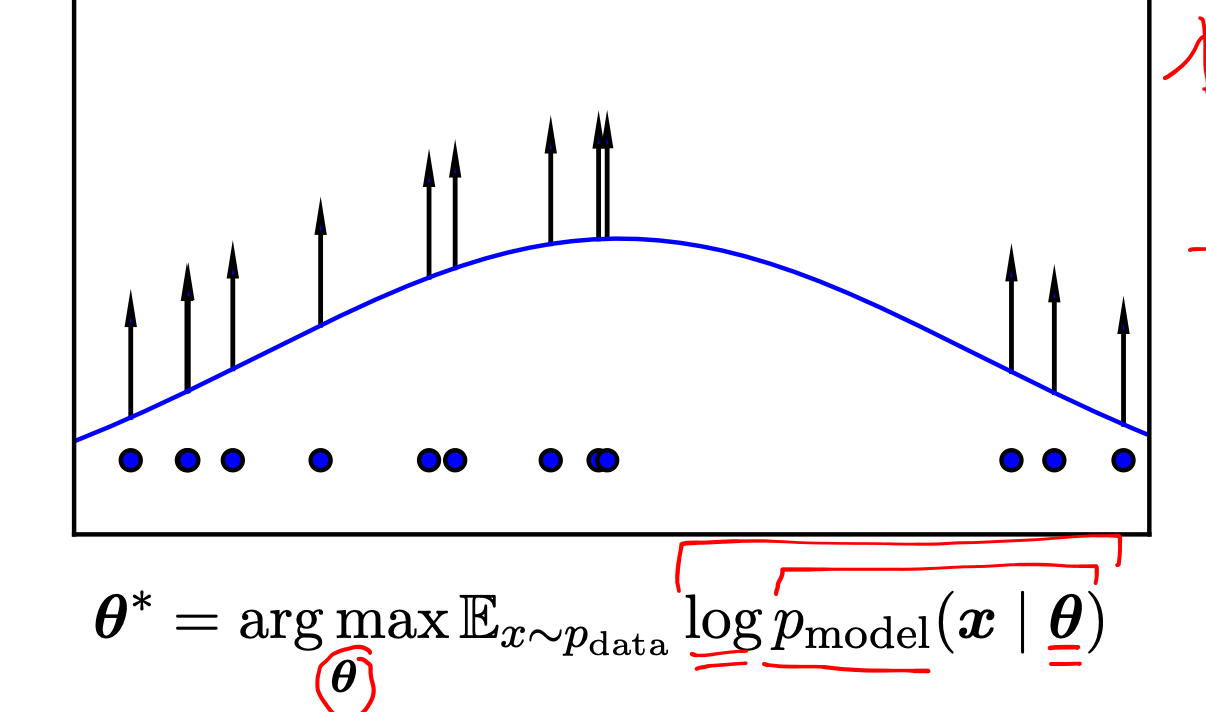

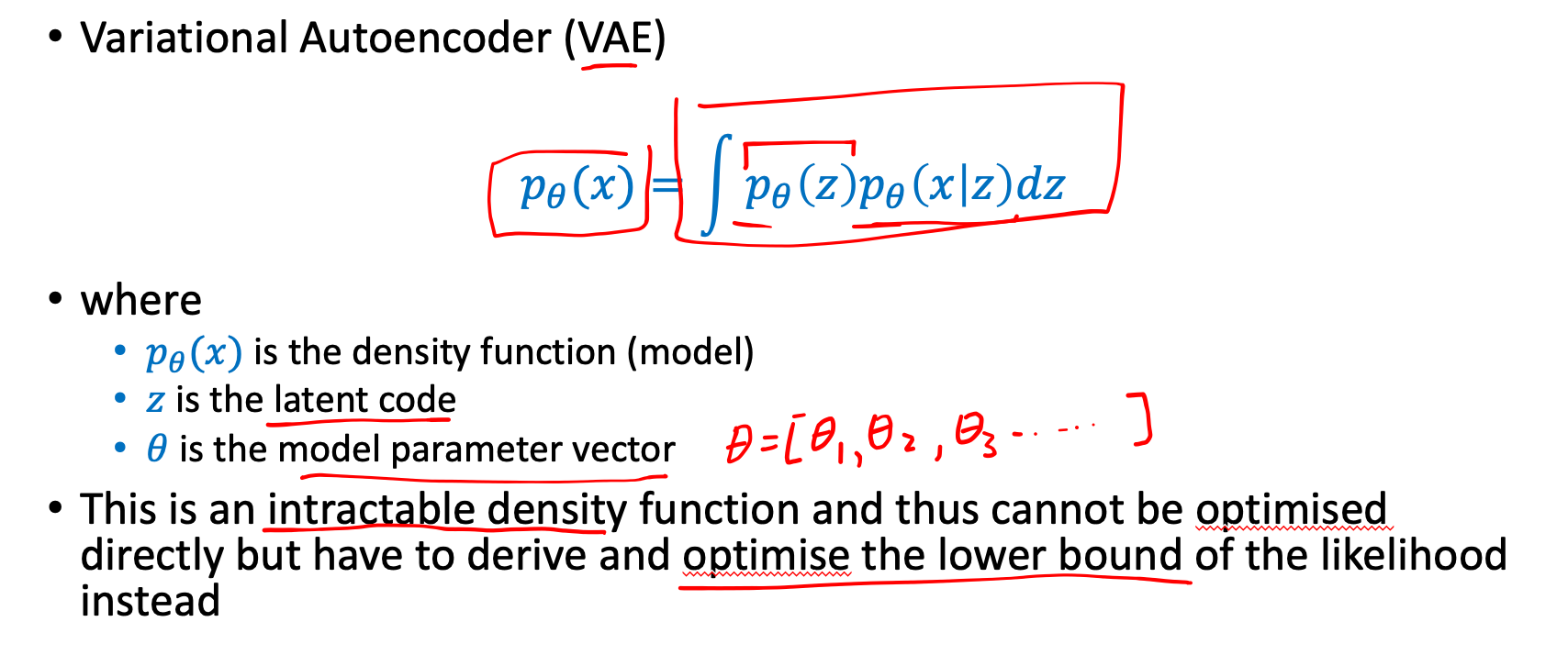

Maximum Likelihood

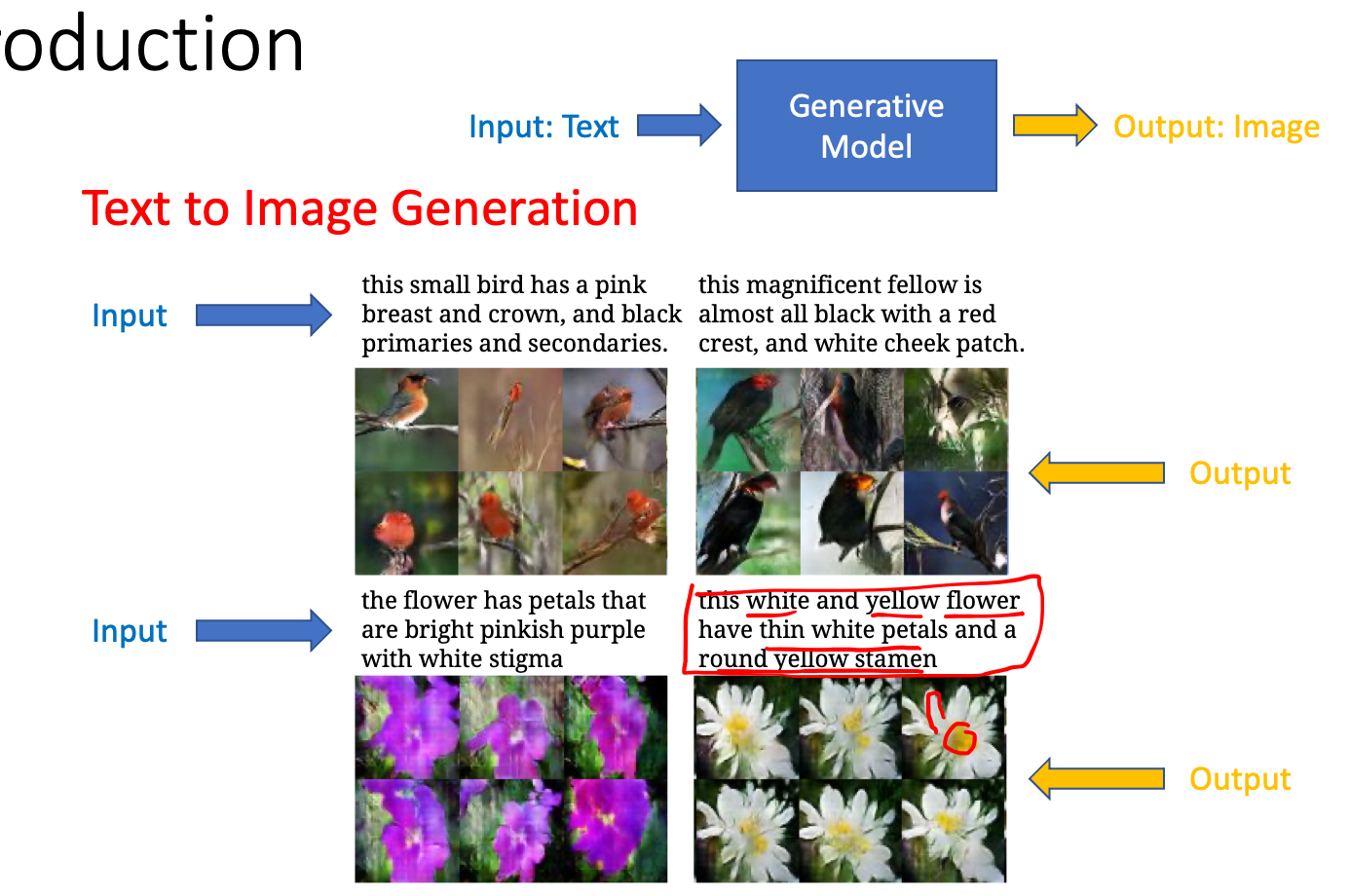

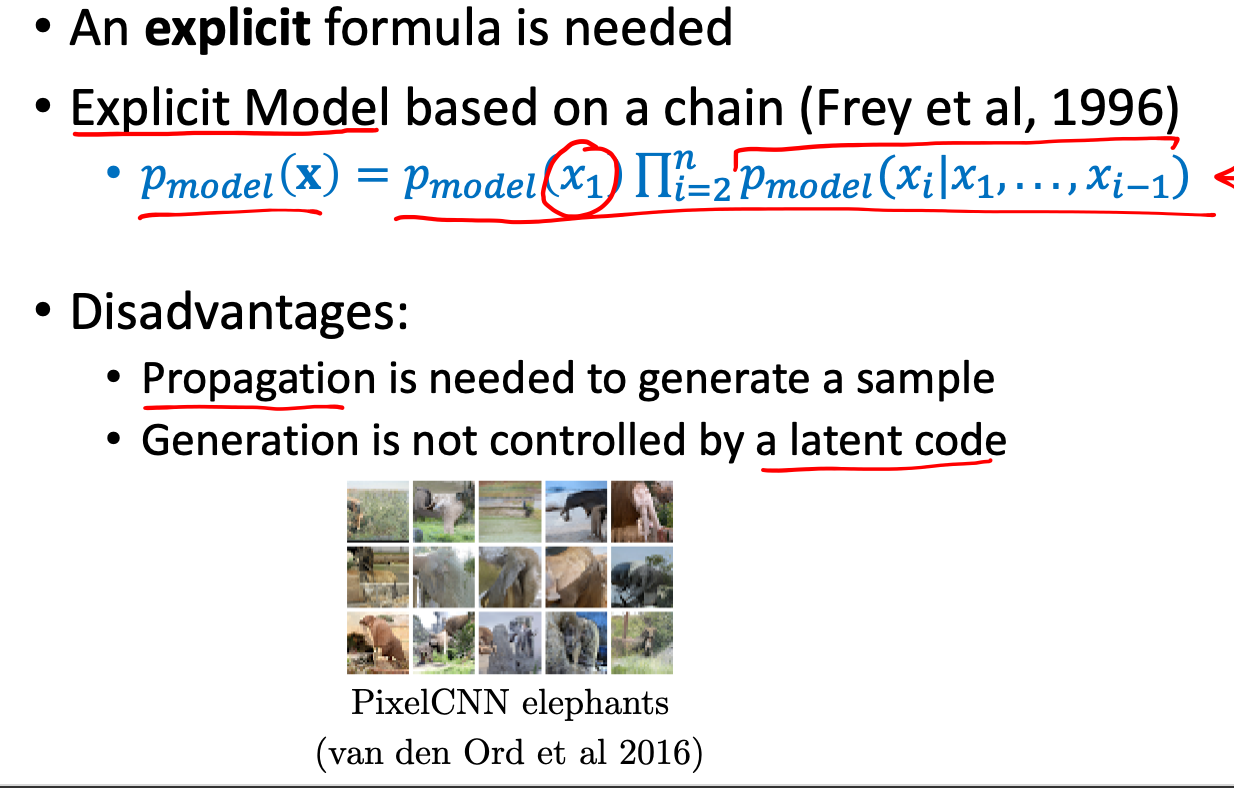

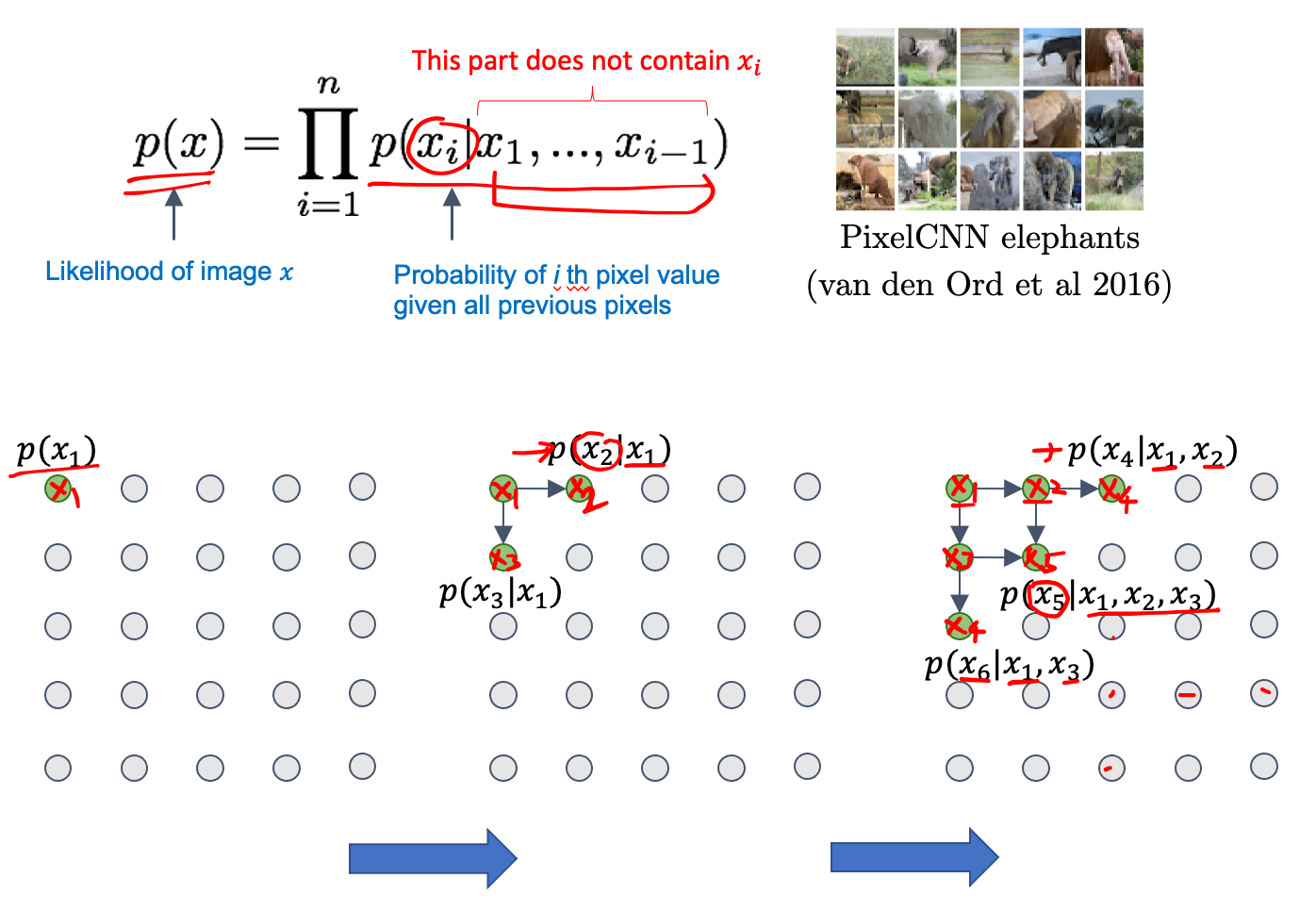

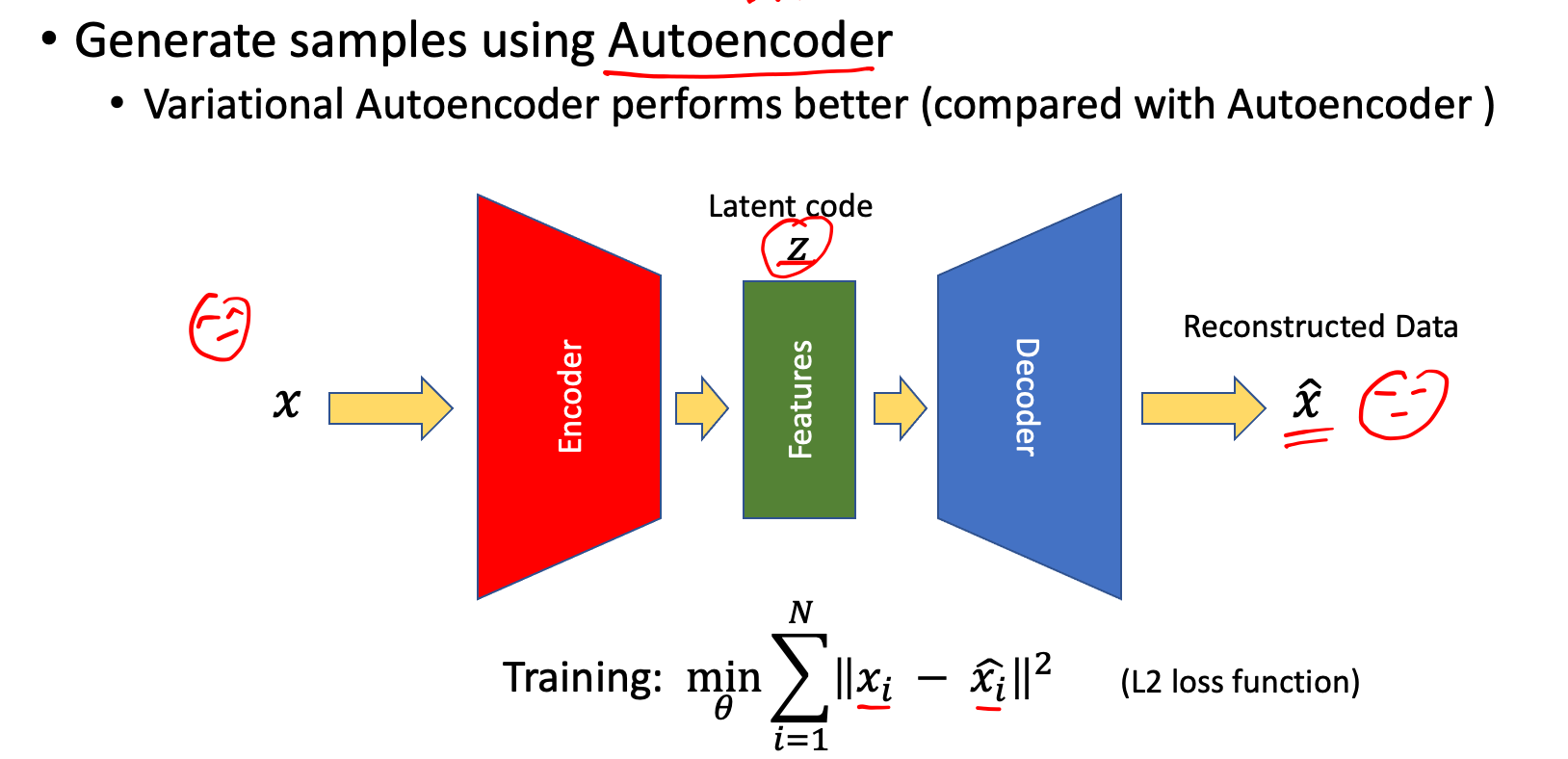

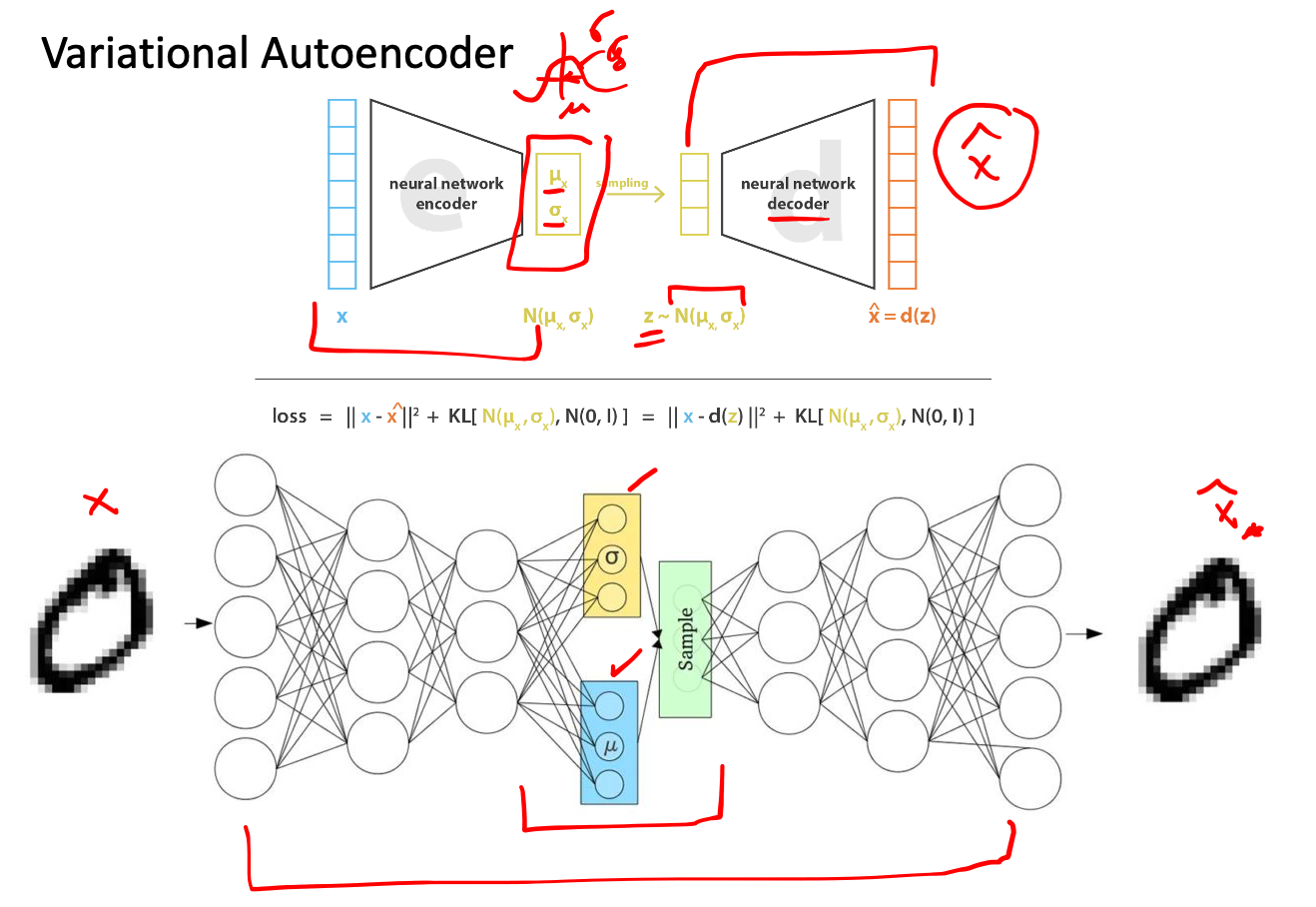

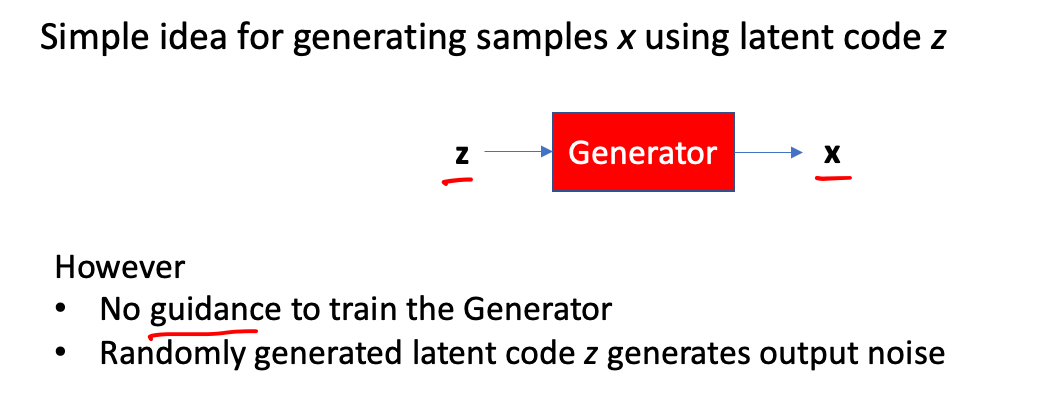

Generate image

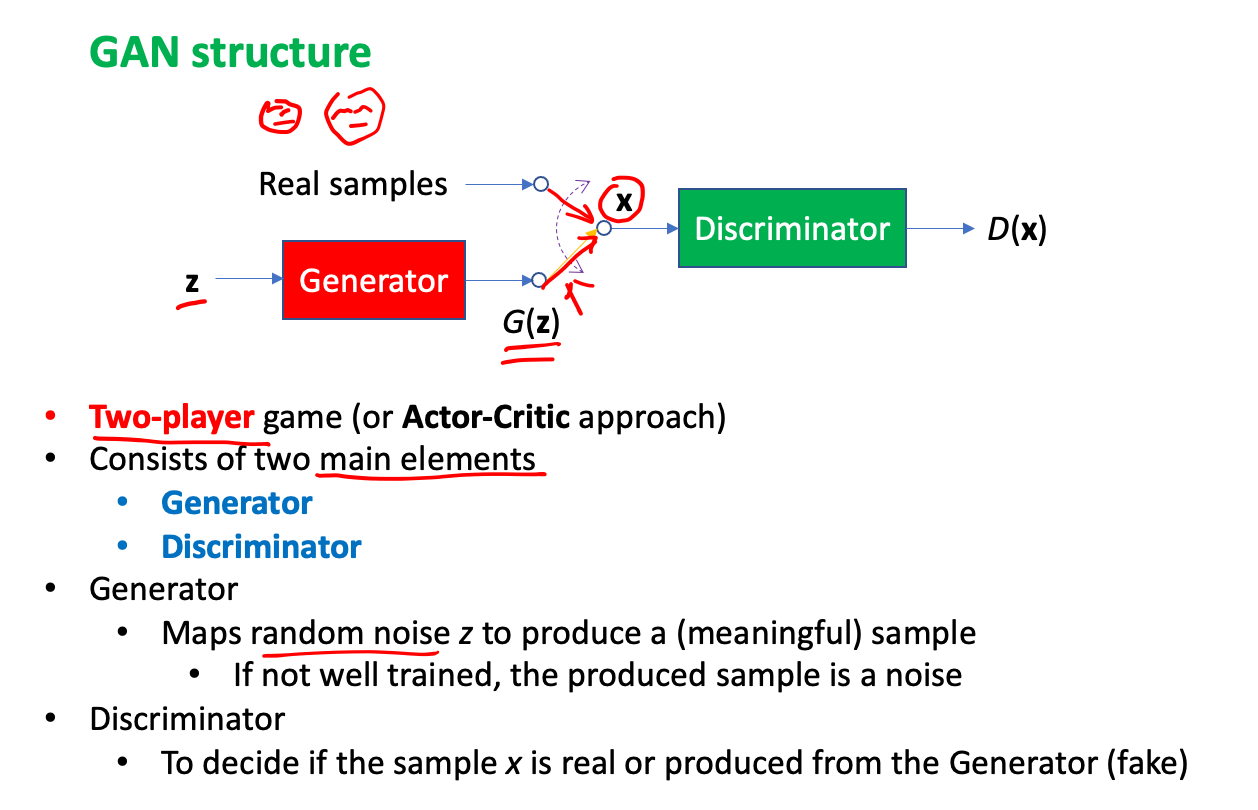

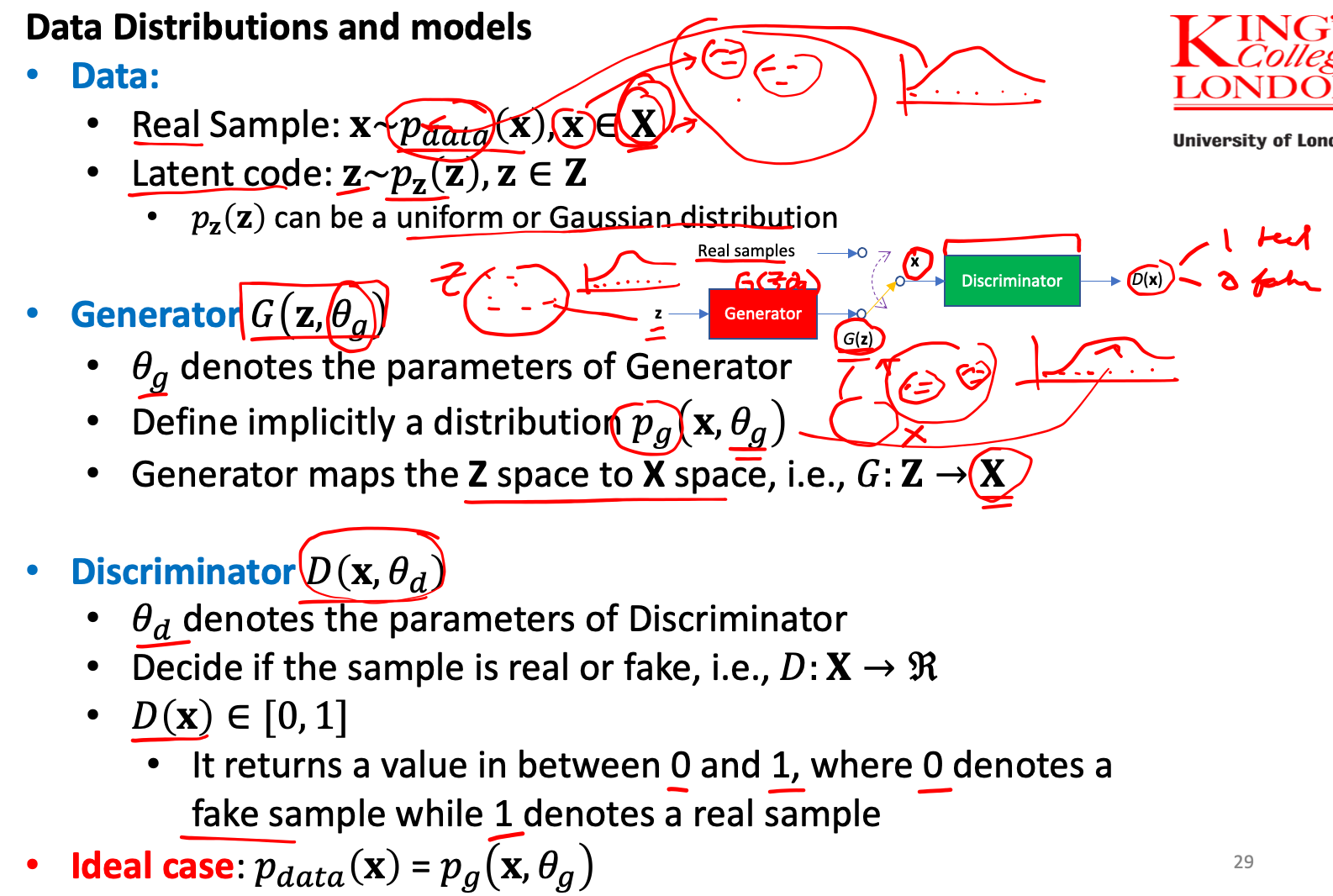

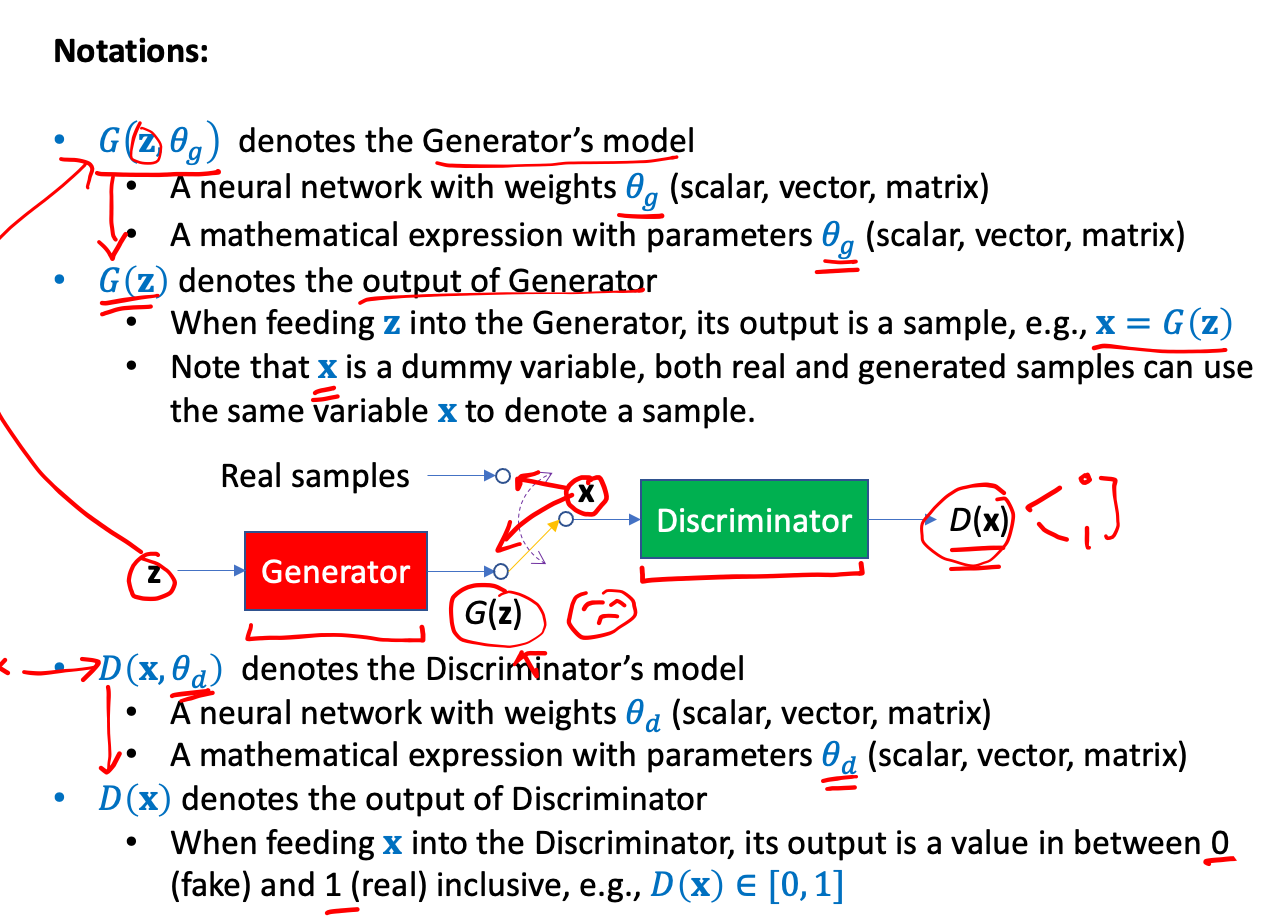

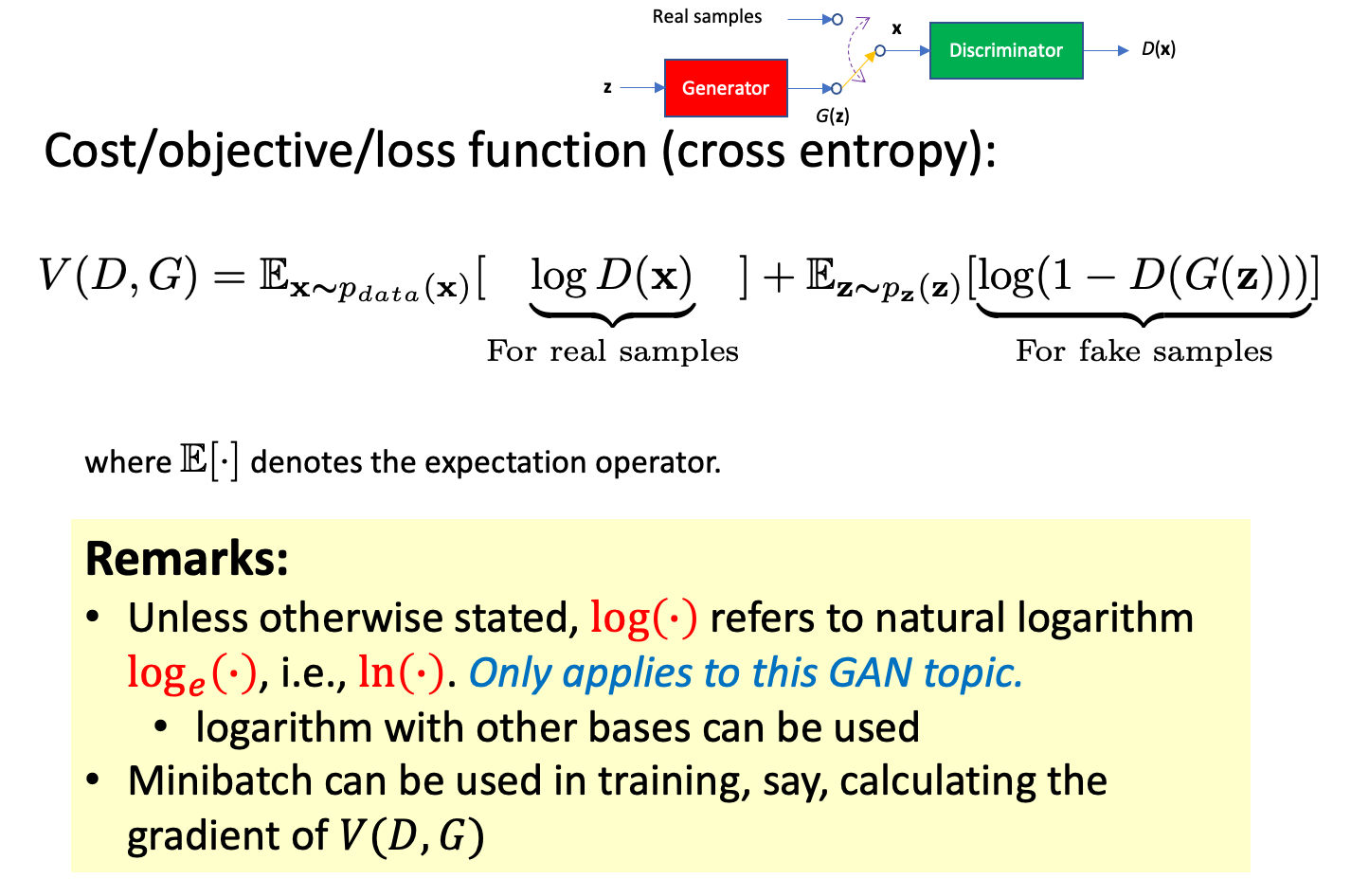

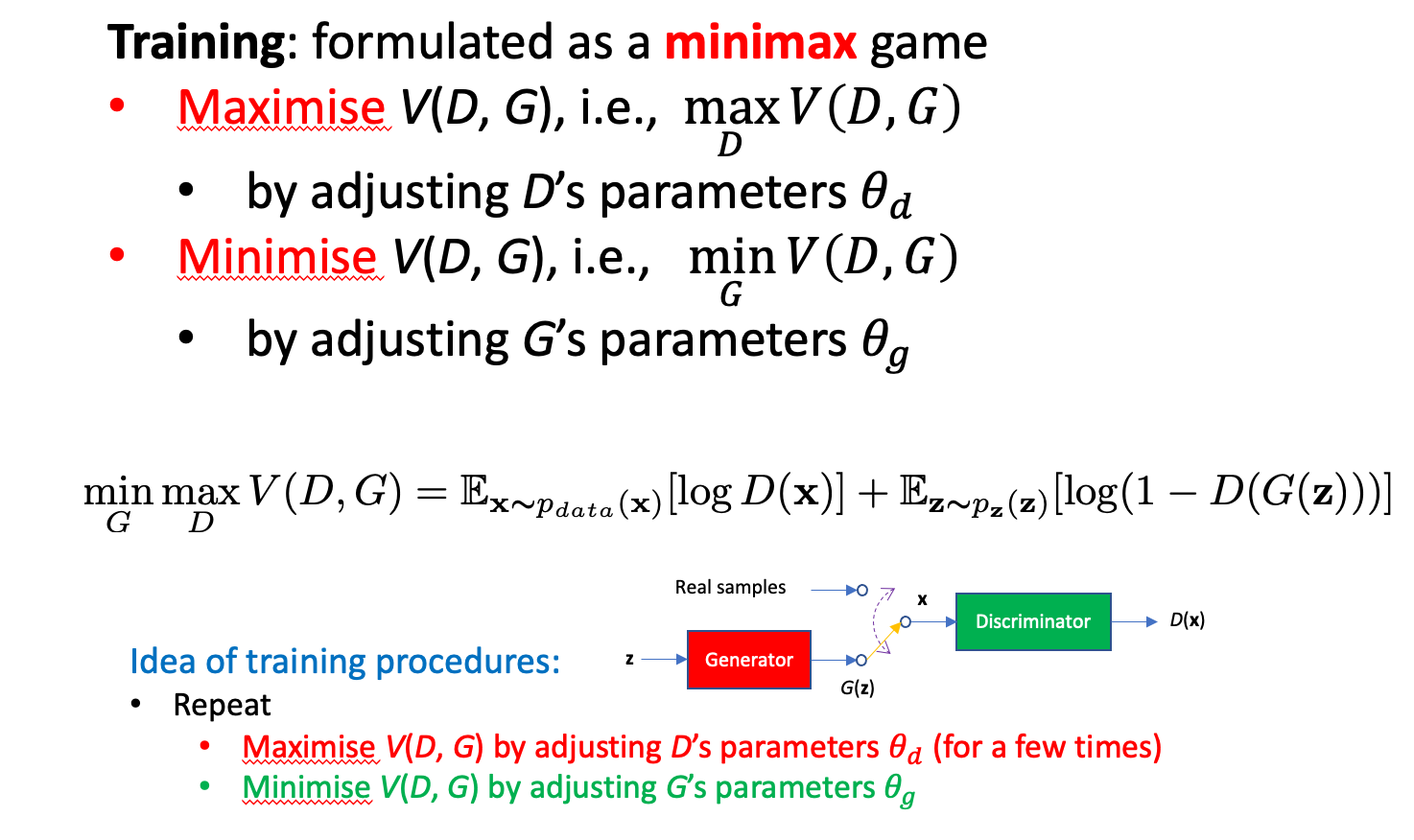

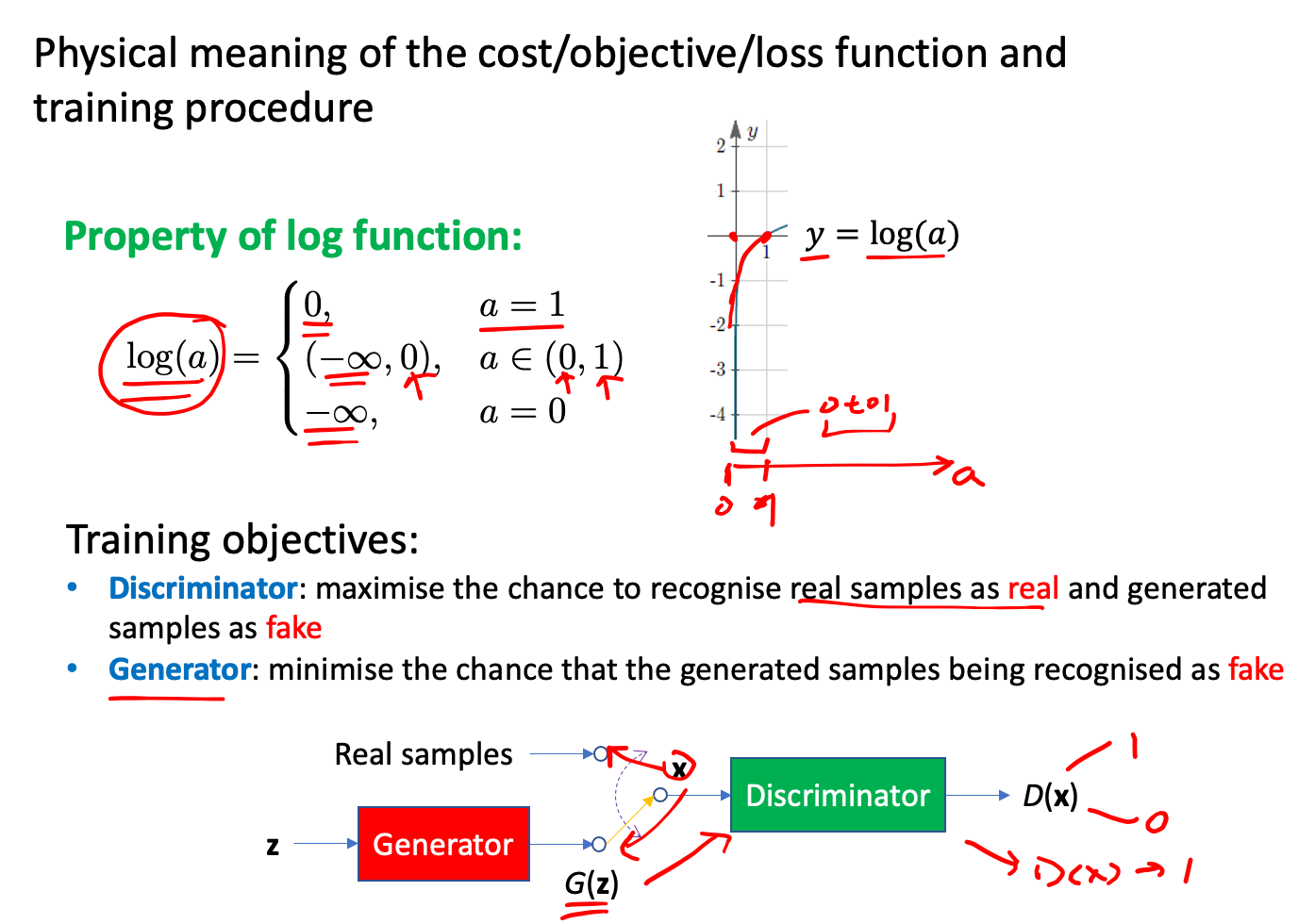

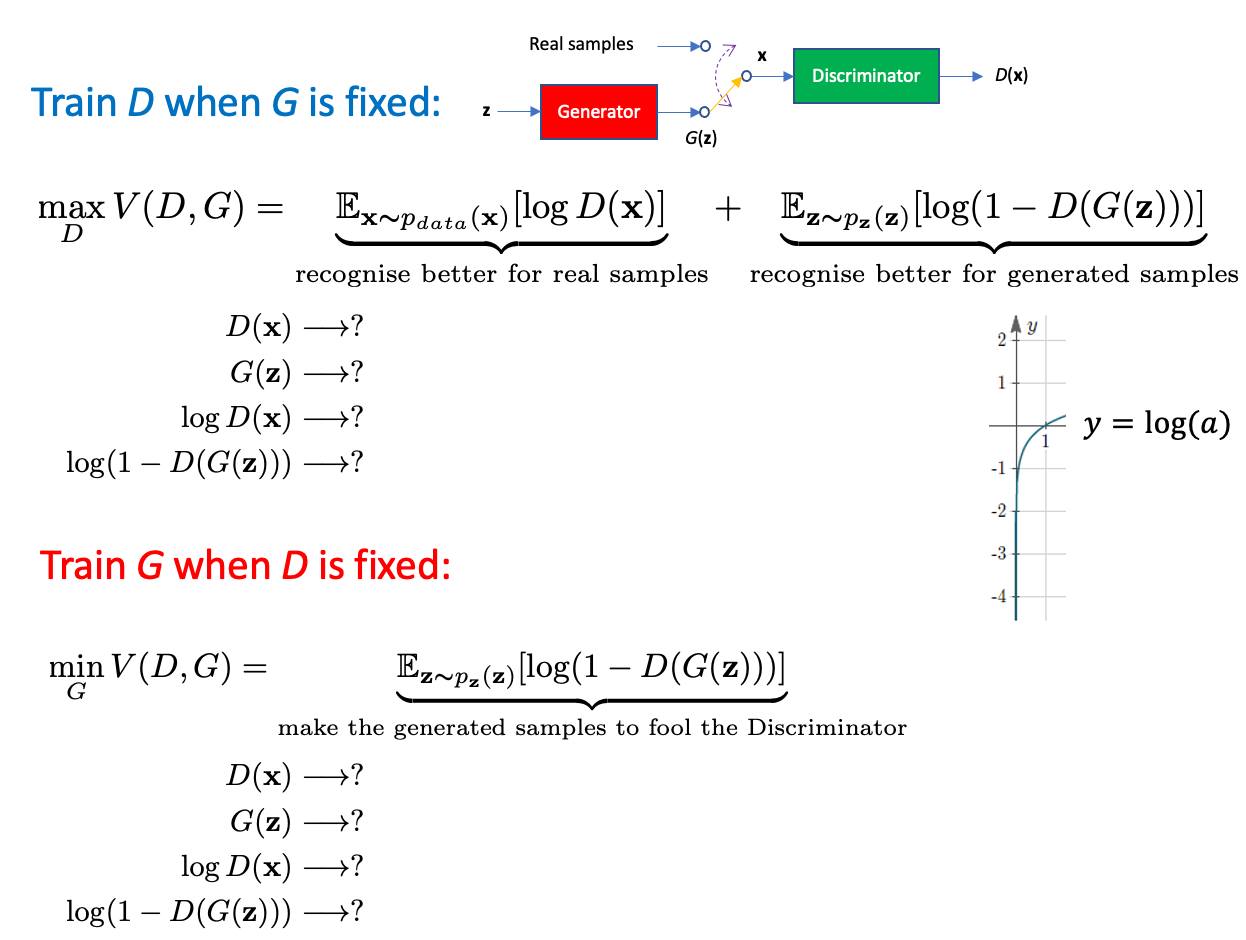

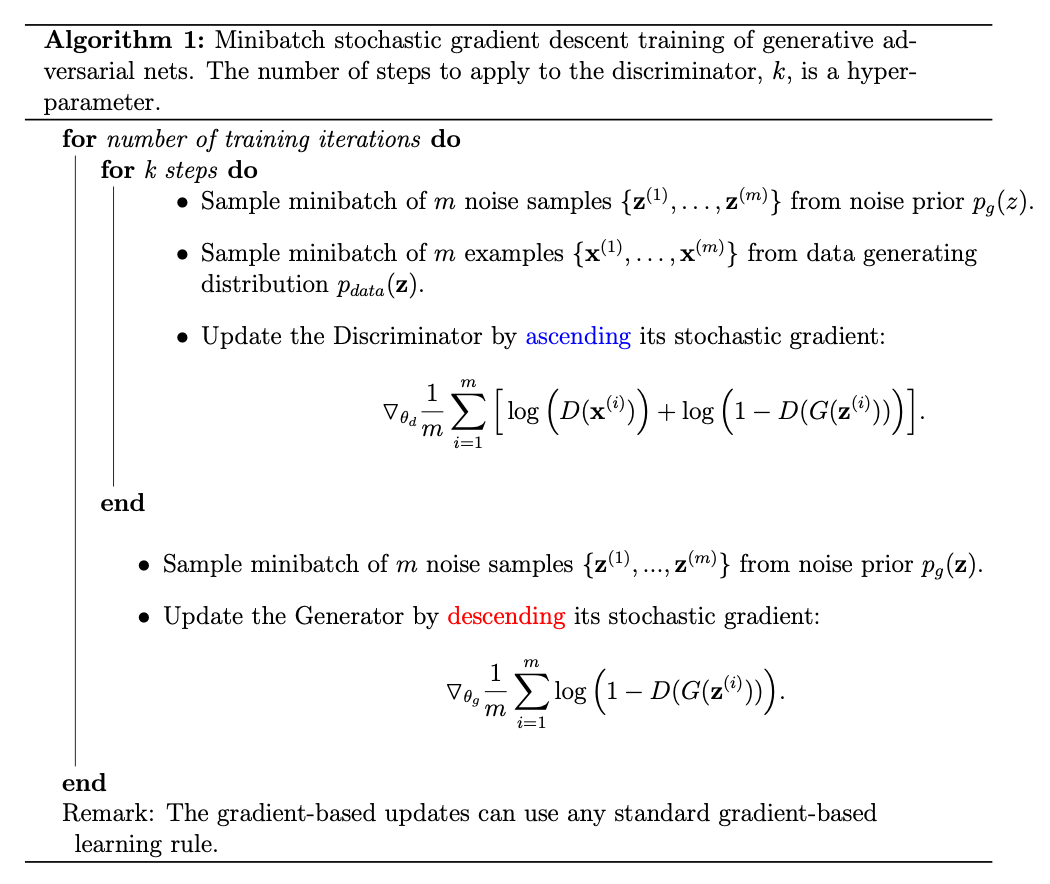

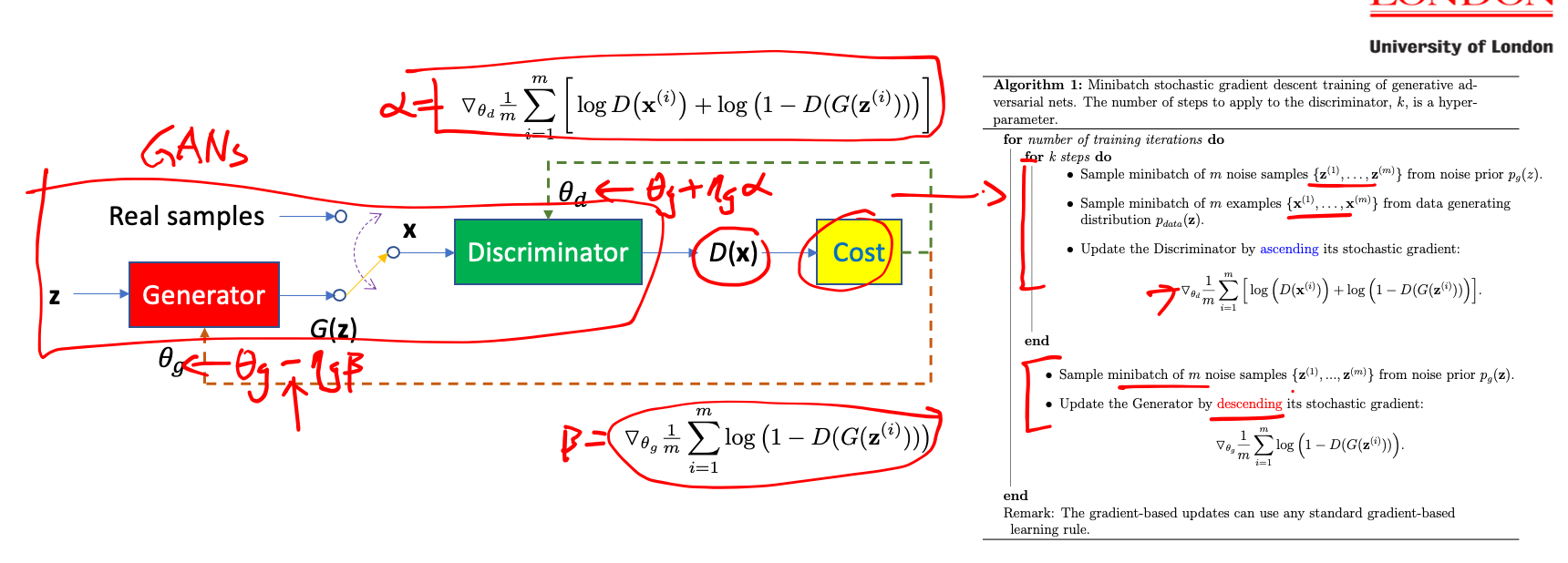

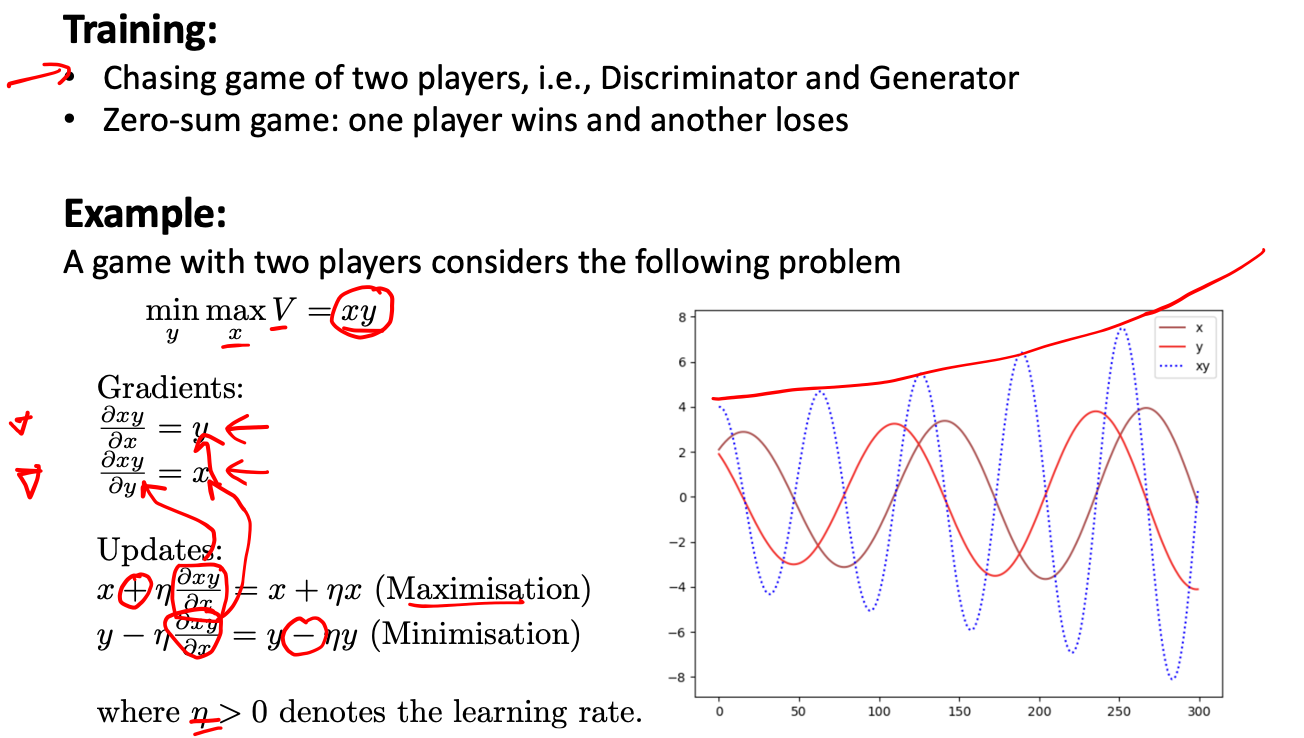

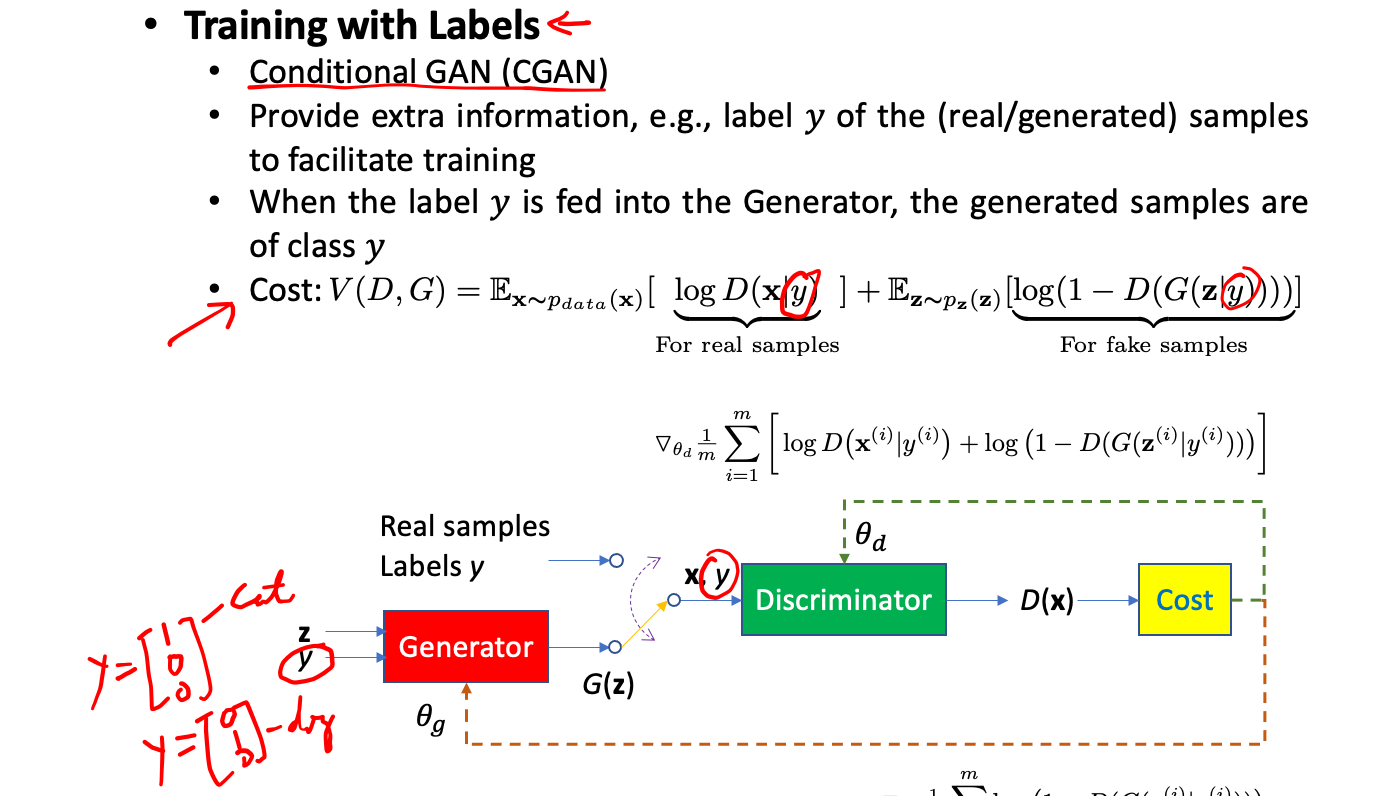

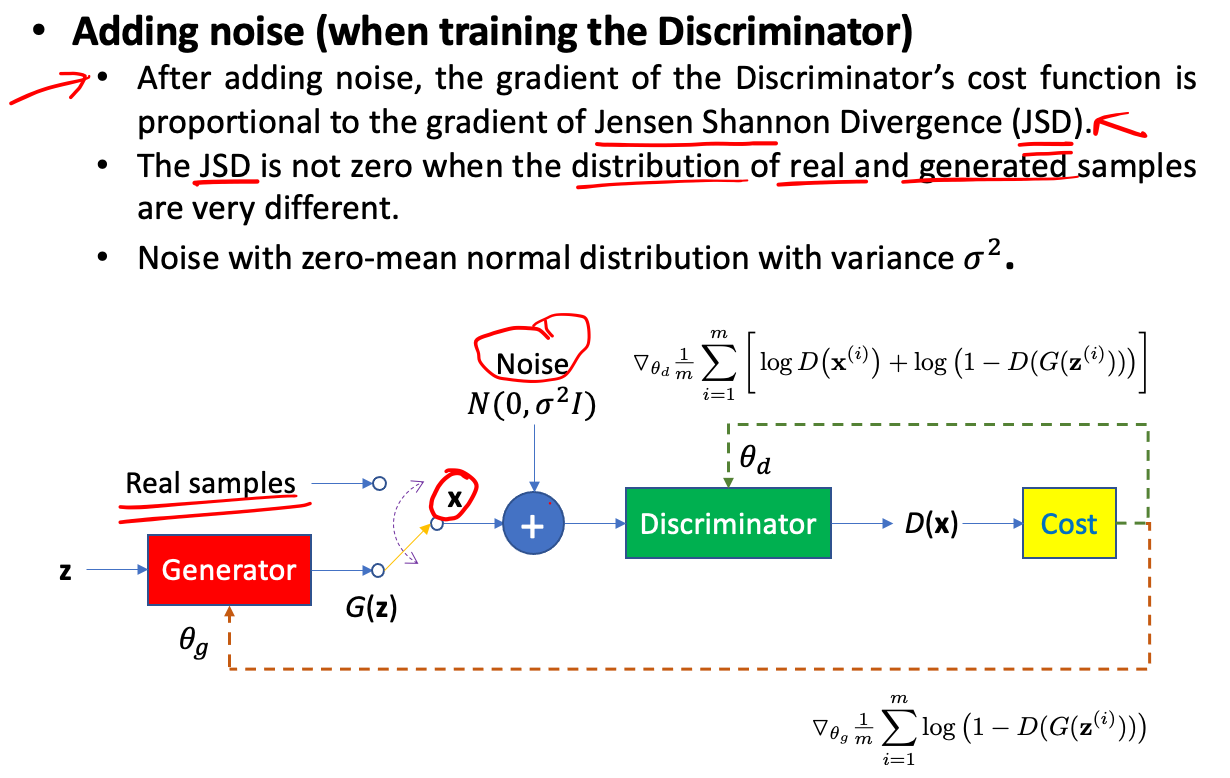

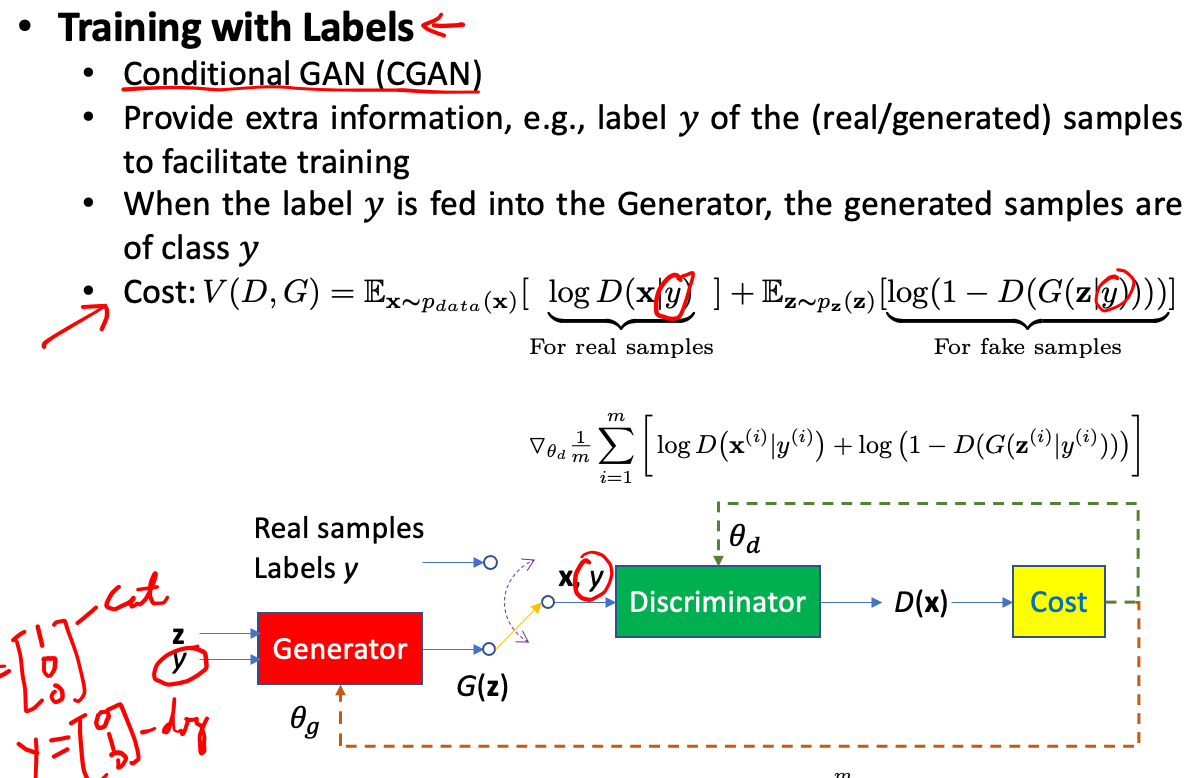

**Generative Adversarial Networks**

Generative Adversarial Networks Python code

```python
# Calculating the cost function
import numpy as np

# Real samples
X = np.array([
    [1, 2],
    [3, 4],
])

# Generated samples, standing in for G(x)
X_pred = np.array([
    [5, 6],
    [7, 8],
])

# Uniform weight (probability) of each sample
weight = np.ones(2) * 0.5

# Define the discriminator
def Dx(x, a=0.1, b=0.2):
    x = np.transpose(x)
    return 1 / (1 + np.exp(-a * x[0] + b * x[1] + 2))

# Calculate the cost
def V(x, x_pred=X_pred, weight=weight, Dx=Dx):
    # E(ln(D(x)))
    E_lnDx = np.dot(np.log(Dx(x)), weight.transpose())
    # E(ln(1-D(G(x)))), G(x) = X_pred
    E_ln_1_DGx = np.dot(np.log(1 - Dx(x_pred)), weight.transpose())
    return E_lnDx + E_ln_1_DGx

print(V(X, X_pred, weight, Dx))
```

Output

-2.5465207684425333
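For reference, the quantity computed above can be written out as the standard GAN value function. This is a sketch based on the comments in the code: `X_pred` is assumed to play the role of the generator output $G(z)$, and $x_1, x_2$ denote the two components of a sample (`x[0]` and `x[1]` in the code).

```latex
V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\ln D(x)\big]
        + \mathbb{E}_{z \sim p_z}\big[\ln\big(1 - D(G(z))\big)\big]

% Discrete form evaluated by the code, with w_i = 0.5 and \hat{x}_i the rows of X_pred:
V = \sum_i w_i \ln D(x_i) + \sum_i w_i \ln\big(1 - D(\hat{x}_i)\big),
\qquad
D(x) = \frac{1}{1 + \exp(-a x_1 + b x_2 + 2)}, \quad a = 0.1,\ b = 0.2

% Plugging in the four samples:
V \approx 0.5\,(\ln 0.0911 + \ln 0.0759) + 0.5\,(\ln 0.9370 + \ln 0.9478)
  \approx -2.4872 - 0.0593 \approx -2.5465
```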

Python code, continued

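```python
from jax import grad
import jax.numpy as jnp
import numpy as np

# Discriminator parameters [m1, m2]; X and Dx come from the previous snippet
a = [0.1, 0.2]

# Discriminator as a function of m1 (passed as `a`), with m2 fixed at b
def Dm_1(a, x=X, b=0.2):
    temp = 1 / (jnp.exp(jnp.dot(jnp.array([-a, b]), jnp.transpose(x)) + 2) + 1)
    return temp

# Discriminator as a function of m2 (passed as `a`), with m1 fixed at b
def Dm_2(a, x=X[0], b=0.1):
    return 1 / (jnp.exp(jnp.dot(jnp.array([-b, a]), jnp.transpose(x)) + 2) + 1)

# dD/dm1, evaluated one sample at a time (grad needs a scalar output)
def multi_Dm_1(a, x):
    result = []
    for sample in x:
        temp_func = grad(Dm_1)(a, sample)
        result.append(temp_func)
    return np.array(result)

# dD/dm2, evaluated one sample at a time
def multi_Dm_2(a, x):
    result = []
    for sample in x:
        temp_func = grad(Dm_2)(a, sample)
        result.append(temp_func)
    return np.array(result)

# d(ln(D(x)))/dm1 = 1/D(x) * d(D(x))/dm1
print(1 / Dx(X) * multi_Dm_1(0.1, X))
```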

Output

[0.90887703 2.77242559]
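As a cross-check on the autodiff result, the same derivative has a simple closed form for this logistic discriminator: d ln D(x) / dm1 = x[0] * (1 - D(x)). The sketch below uses only the standard library and compares that closed form with a central finite difference. The helper names (`discriminator`, `dlogD_dm1`, `dlogD_dm1_numeric`) are illustrative and not part of the tutorial code.

```python
import math

def discriminator(x, m1=0.1, m2=0.2):
    """Same logistic form as Dx above: 1 / (1 + exp(-m1*x0 + m2*x1 + 2))."""
    return 1.0 / (1.0 + math.exp(-m1 * x[0] + m2 * x[1] + 2.0))

def dlogD_dm1(x, m1=0.1, m2=0.2):
    """Closed form: d ln D(x) / dm1 = x0 * (1 - D(x))."""
    return x[0] * (1.0 - discriminator(x, m1, m2))

def dlogD_dm1_numeric(x, m1=0.1, m2=0.2, eps=1e-6):
    """Central finite difference of ln D(x) with respect to m1."""
    up = math.log(discriminator(x, m1 + eps, m2))
    down = math.log(discriminator(x, m1 - eps, m2))
    return (up - down) / (2.0 * eps)

X = [(1, 2), (3, 4)]
analytic = [dlogD_dm1(x) for x in X]
numeric = [dlogD_dm1_numeric(x) for x in X]
print(analytic)  # agrees with the JAX output above to about six decimals
print(numeric)
```

The agreement between the two lists suggests that the per-sample `grad` loop is computing exactly this derivative, so for a model this small the closed form could replace the loop.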

Python code, continued

```python
# Calculate the gradient update (one gradient ascent step on V for the
# discriminator parameters). Dx is the discriminator from the first snippet.
from jax import grad
import jax.numpy as jnp
import numpy as np

X = np.array([
    [1, 2],
    [3, 4],
])

X_pred = np.array([
    [5, 6],
    [7, 8],
])

weight = np.ones(2) * 0.5

# Define the learning rate
n = 0.02

# Discriminator parameters [m1, m2]
a = [0.1, 0.2]

def Dm_1(a, x=X, b=0.2):
    temp = 1 / (jnp.exp(jnp.dot(jnp.array([-a, b]), jnp.transpose(x)) + 2) + 1)
    return temp

def Dm_2(a, x=X[0], b=0.1):
    return 1 / (jnp.exp(jnp.dot(jnp.array([-b, a]), jnp.transpose(x)) + 2) + 1)

def multi_Dm_1(a, x):
    result = []
    for sample in x:
        temp_func = grad(Dm_1)(a, sample)
        result.append(temp_func)
    return np.array(result)

def multi_Dm_2(a, x):
    result = []
    for sample in x:
        temp_func = grad(Dm_2)(a, sample)
        result.append(temp_func)
    return np.array(result)

def d_V(x, x_pred=X_pred, weight=weight, Dx=Dx):
    # d(ln(D(x)))/dm1 + d(ln(1 - D(G(x))))/dm1 = d(ln(D(x)))/d(D(x)) * d(D(x))/dm1 +
    # d(ln(1 - D(G(x))))/d(1 - D(G(x))) * d(1 - D(G(x)))/d(D(G(x))) * d(D(G(x)))/dm1
    d_m1 = 1 / Dx(x) * multi_Dm_1(a[0], x) + 1 / (1 - Dx(x_pred)) * (-1) * multi_Dm_1(a[0], x_pred)
    # d(ln(D(x)))/dm2 + d(ln(1 - D(G(x))))/dm2 = d(ln(D(x)))/d(D(x)) * d(D(x))/dm2 +
    # d(ln(1 - D(G(x))))/d(1 - D(G(x))) * d(1 - D(G(x)))/d(D(G(x))) * d(D(G(x)))/dm2
    d_m2 = 1 / Dx(x) * multi_Dm_2(a[1], x) + 1 / (1 - Dx(x_pred)) * (-1) * multi_Dm_2(a[1], x_pred)
    # Sum over samples and apply the weights
    result = np.dot([d_m1, d_m2], weight)
    return result

update = d_V(X)
# Gradient ascent: move the parameters in the direction that increases V
a_new = np.array(a) + n * update
print(a_new)
```

Output

[0.13001361 0.15280747]
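The same update can be reproduced without autodiff, which makes the chain rule in the comments above easier to follow. The sketch below is a standard-library version under the same assumptions (two real samples, two generated samples, weight 0.5 each, learning rate 0.02). The names `value`, `value_gradient`, `m` and `m_new` are illustrative and not part of the tutorial code.

```python
import math

X = [(1, 2), (3, 4)]        # real samples
X_PRED = [(5, 6), (7, 8)]   # generated samples, standing in for G(x)
WEIGHT = 0.5                # uniform weight per sample
LEARNING_RATE = 0.02

def discriminator(x, m):
    """D(x) = 1 / (1 + exp(-m1*x0 + m2*x1 + 2)), as in Dx above."""
    return 1.0 / (1.0 + math.exp(-m[0] * x[0] + m[1] * x[1] + 2.0))

def value(m):
    """V = E[ln D(x)] + E[ln(1 - D(G(x)))] over the toy samples."""
    real = sum(WEIGHT * math.log(discriminator(x, m)) for x in X)
    fake = sum(WEIGHT * math.log(1.0 - discriminator(x, m)) for x in X_PRED)
    return real + fake

def value_gradient(m):
    """Closed-form gradient of V with respect to (m1, m2).

    d ln D / dm       = (1 - D) * (x0, -x1)
    d ln(1 - D) / dm  = -D * (x0, -x1)
    """
    g1 = g2 = 0.0
    for x in X:
        d = discriminator(x, m)
        g1 += WEIGHT * (1.0 - d) * x[0]
        g2 += WEIGHT * (1.0 - d) * (-x[1])
    for x in X_PRED:
        d = discriminator(x, m)
        g1 += WEIGHT * (-d) * x[0]
        g2 += WEIGHT * (-d) * (-x[1])
    return g1, g2

m = (0.1, 0.2)
g = value_gradient(m)
# One gradient ascent step: the discriminator tries to increase V
m_new = (m[0] + LEARNING_RATE * g[0], m[1] + LEARNING_RATE * g[1])
print(m_new)                   # approximately (0.13001, 0.15281), as above
print(value(m), value(m_new))  # V increases after the step
```

Because the discriminator maximizes V, the step adds the gradient rather than subtracting it; the second print confirms that V goes up for this learning rate.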

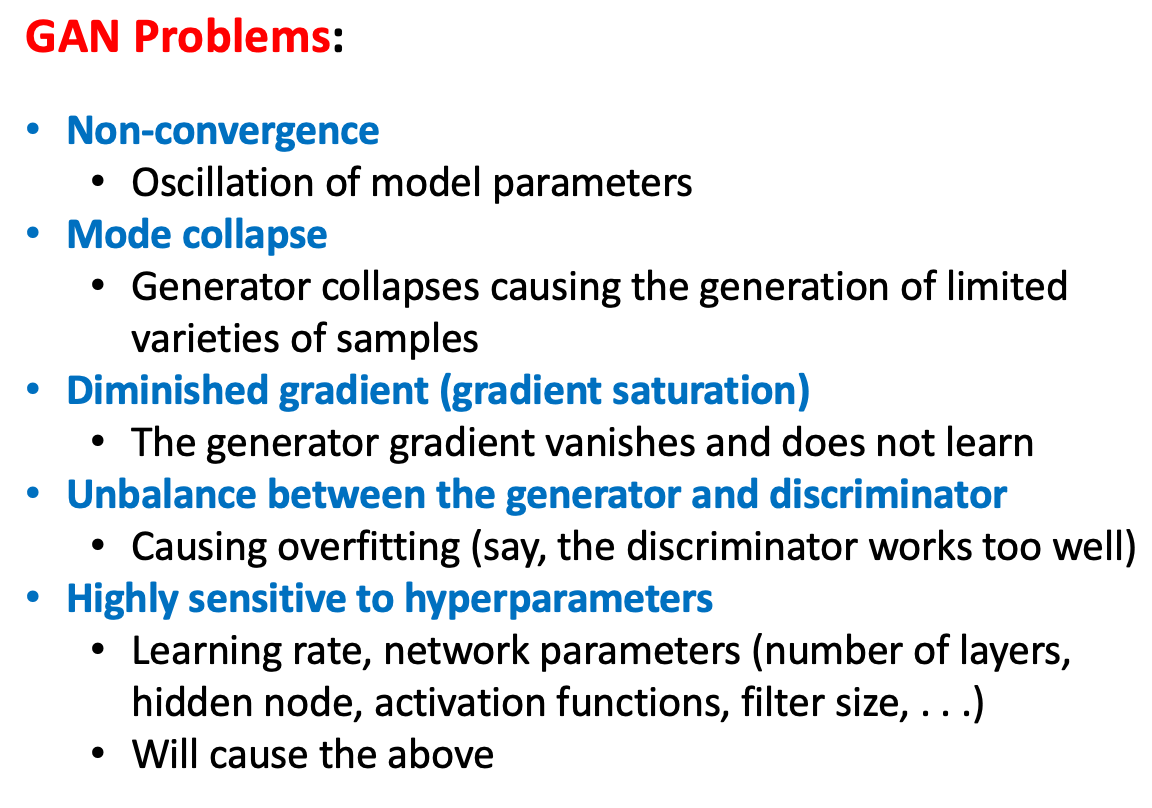

GAN Problems

Non-convergence

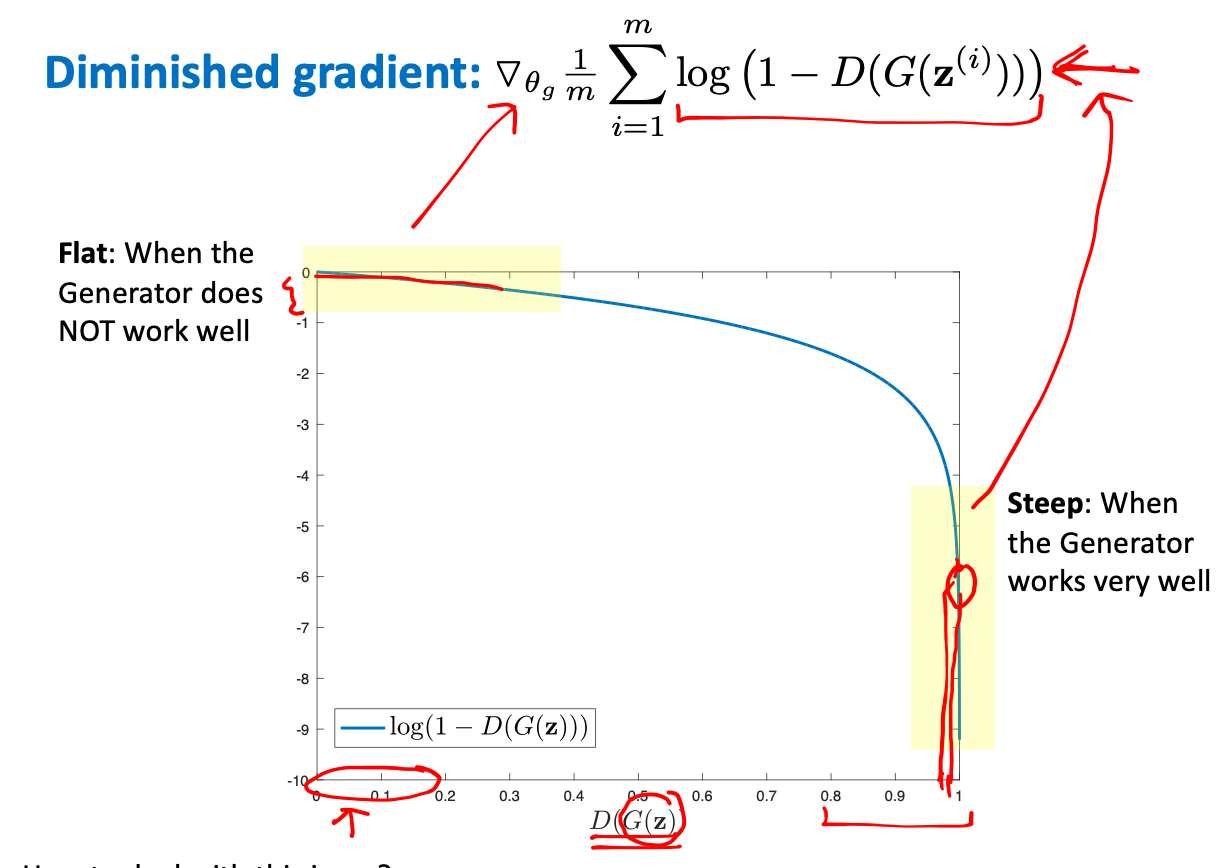
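A common toy illustration of non-convergence (not necessarily the one shown in the figure) is the two-player game V(x, y) = x * y, where x tries to minimize V and y tries to maximize it. The sketch below assumes both players take simultaneous gradient steps. The function name `simultaneous_steps` and the starting point are illustrative choices.

```python
import math

def simultaneous_steps(x, y, lr=0.1, steps=100):
    """x descends on V = x*y while y ascends, both using the old values."""
    radii = []
    for _ in range(steps):
        grad_x, grad_y = y, x                      # dV/dx = y, dV/dy = x
        x, y = x - lr * grad_x, y + lr * grad_y    # simultaneous update
        radii.append(math.hypot(x, y))             # distance from (0, 0)
    return radii

radii = simultaneous_steps(1.0, 1.0)
print(radii[0], radii[-1])
```

The only equilibrium is (0, 0), but each step multiplies the distance from it by sqrt(1 + lr^2), so the iterates spiral outward instead of settling down.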

Diminished gradient
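One way to see the diminished gradient numerically: write the discriminator output on a generated sample as D = sigmoid(s). The generator term ln(1 - D) then has derivative -D with respect to the logit s, which vanishes when the discriminator confidently rejects the sample (D close to 0). The sketch below compares this with the commonly used non-saturating alternative, -ln D. The function names are illustrative, and the comparison is an added example rather than something taken from the figures.

```python
import math

def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))

def saturating_grad(s):
    """d/ds ln(1 - sigmoid(s)) = -sigmoid(s)."""
    return -sigmoid(s)

def non_saturating_grad(s):
    """d/ds [-ln sigmoid(s)] = -(1 - sigmoid(s))."""
    return -(1.0 - sigmoid(s))

# s is the discriminator logit on a generated sample; very negative s
# means the discriminator is confident the sample is fake.
for s in (0.0, -2.0, -5.0, -10.0):
    print(s, sigmoid(s), saturating_grad(s), non_saturating_grad(s))
```

As s becomes more negative, the first gradient shrinks toward 0 while the second stays close to -1, which is why the generator learns slowly under the original objective when the discriminator is much stronger.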
